BigQuery

Estimated setup time: 30 minutes

Methods

There are two ways to send data from BigQuery to Aampe:

1. Direct read access to your BigQuery dataset

This method is generally used when event data is exported directly from Firebase to BigQuery.

Aampe accesses your BigQuery dataset through a dedicated service account, which we will provide to you.

To give Aampe read-only access to your BigQuery dataset, grant the following permissions to the Aampe service account:

- bigquery.datasets.get

- bigquery.readsessions.create

- bigquery.readsessions.getData

- bigquery.tables.get

- bigquery.tables.getData

- bigquery.tables.list

Google's predefined BigQuery Data Viewer role (roles/bigquery.dataViewer) is the closest match to what Aampe needs, but it is designed for read access within your own company and is therefore too broad to grant to an external company.

We recommend that you:

a) Create a custom role with only the minimal permissions listed above.

b) Grant this role to the Aampe service account.

Before you begin, make sure you have the “Role Administrator” (roles/iam.roleAdmin) and “Security Admin” (roles/iam.securityAdmin) roles.
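As a command-line alternative to the console procedure that follows, the same custom role can be created with `gcloud iam roles create`. The minimal sketch below only assembles that command from the permissions listed above; it does not run it. The role ID `aampe_custom_bq_read` and the project ID `my-project` are hypothetical placeholders (custom role IDs may contain only letters, digits, underscores and periods).

```python
import re
import shlex

# Minimal permissions Aampe needs, copied from the list above.
AAMPE_BQ_PERMISSIONS = [
    "bigquery.datasets.get",
    "bigquery.readsessions.create",
    "bigquery.readsessions.getData",
    "bigquery.tables.get",
    "bigquery.tables.getData",
    "bigquery.tables.list",
]


def create_role_command(project_id: str,
                        role_id: str = "aampe_custom_bq_read") -> list[str]:
    """Build (but do not run) a `gcloud iam roles create` command.

    `role_id` is a hypothetical placeholder; custom role IDs may contain
    only letters, digits, underscores and periods.
    """
    if not re.fullmatch(r"[A-Za-z0-9_.]+", role_id):
        raise ValueError(f"invalid custom role ID: {role_id!r}")
    return [
        "gcloud", "iam", "roles", "create", role_id,
        f"--project={project_id}",
        "--title=Aampe Custom BQ Read Access",
        "--permissions=" + ",".join(AAMPE_BQ_PERMISSIONS),
        "--stage=GA",
    ]


if __name__ == "__main__":
    # "my-project" stands in for your own project ID.
    print(shlex.join(create_role_command("my-project")))
```

Run the printed command in a shell where gcloud is authenticated as a user who holds the roles mentioned above.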

Step-by-step procedure

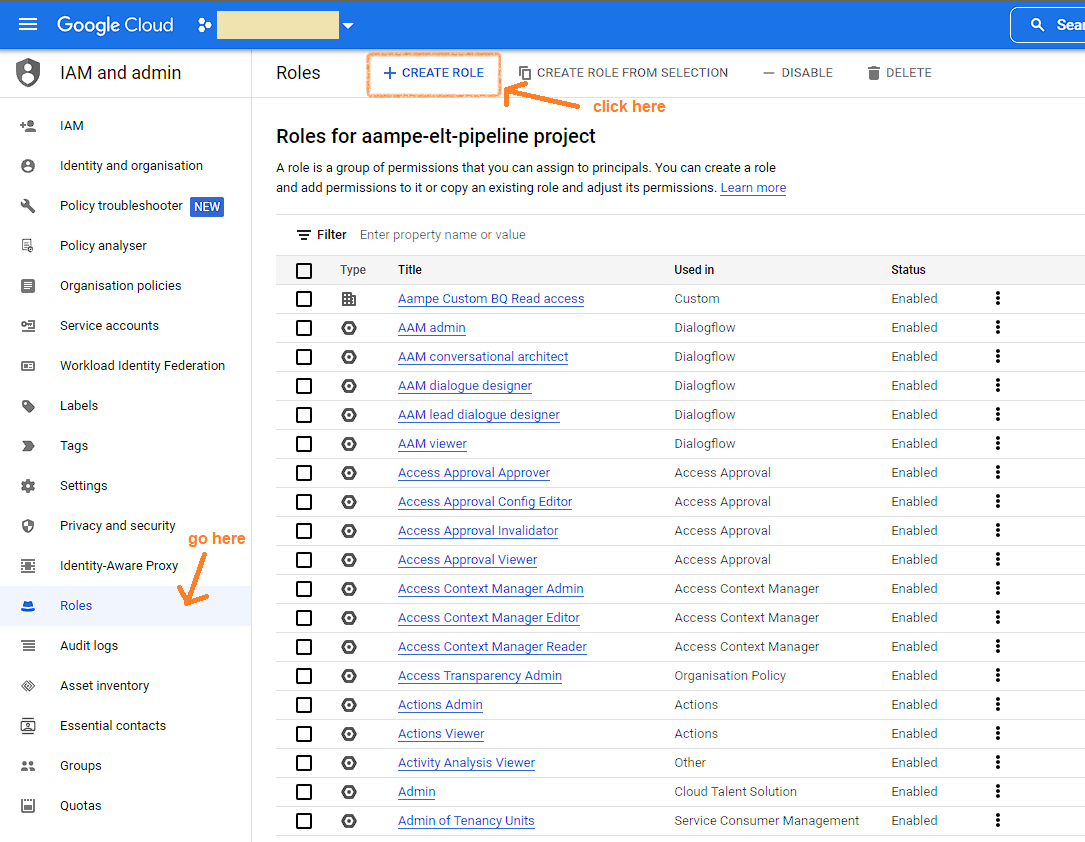

Create the custom role:

- Go to: https://console.cloud.google.com/iam-admin/roles/create?project=<your-project-id>

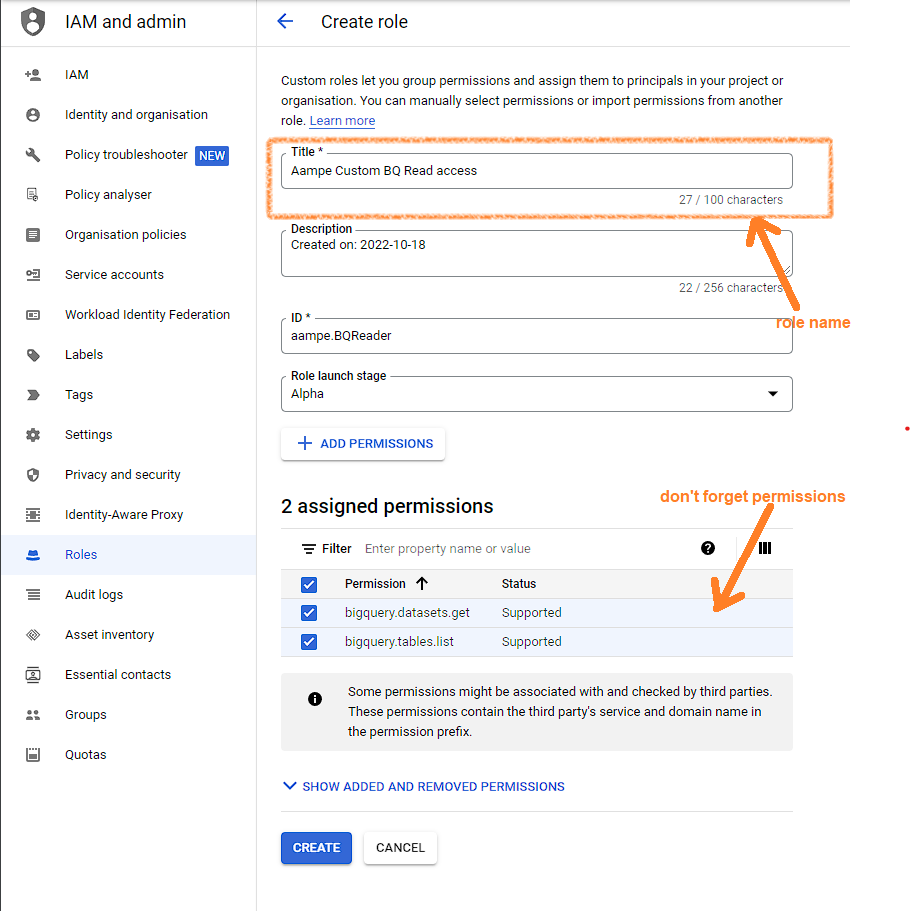

- Fill in the form, using the title Aampe Custom BQ Read Access.

- Add the following permissions:

  - bigquery.datasets.get

  - bigquery.readsessions.create

  - bigquery.readsessions.getData

  - bigquery.tables.get

  - bigquery.tables.getData

  - bigquery.tables.list

Grant permissions:

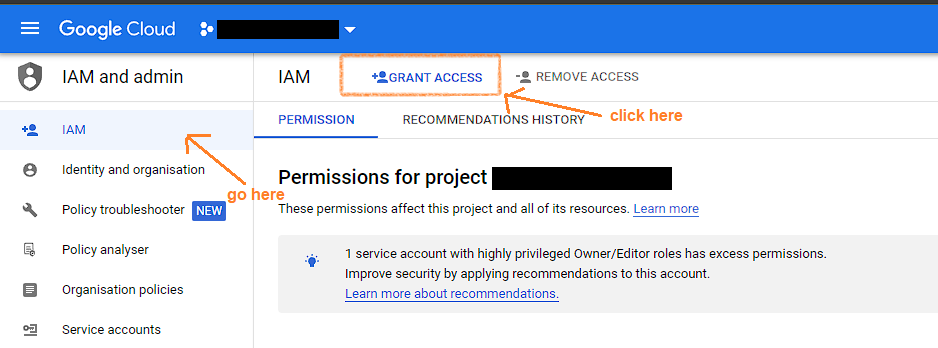

- Go to: https://console.cloud.google.com/iam-admin/iam?project=<your-project-id>

- Click the Grant access button.

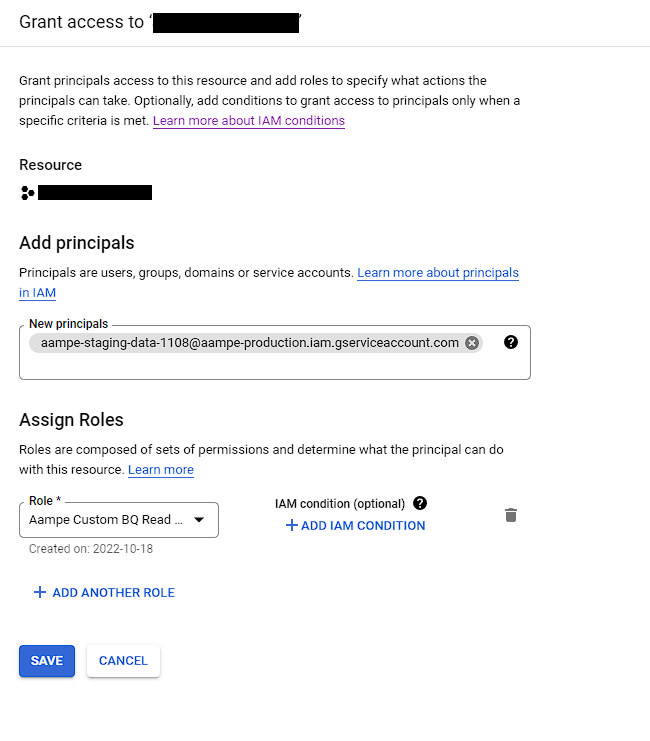

- Under “Add principals”, enter the Aampe service account (we will send you the service account to use for the integration by email).

- Under “Assign roles”, select the Aampe Custom BQ Read Access role and save.
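The grant can also be made from the command line with `gcloud projects add-iam-policy-binding`, which, like the console flow above, binds the role on the whole project. This minimal sketch only builds the command; the project ID, the service account email, and the role ID `aampe_custom_bq_read` are hypothetical placeholders, so substitute your project ID and the account Aampe emails you.

```python
import shlex


def grant_role_command(project_id: str, service_account: str,
                       role_id: str = "aampe_custom_bq_read") -> list[str]:
    """Build (but do not run) a command that grants the custom project role
    to the Aampe service account on the whole project."""
    if "@" not in service_account:
        raise ValueError("expected a service account email address")
    return [
        "gcloud", "projects", "add-iam-policy-binding", project_id,
        f"--member=serviceAccount:{service_account}",
        # Custom project roles are referenced by their full resource name.
        f"--role=projects/{project_id}/roles/{role_id}",
    ]


if __name__ == "__main__":
    # Placeholders: your project ID and the service account Aampe emails you.
    cmd = grant_role_command("my-project",
                             "aampe-sa@example.iam.gserviceaccount.com")
    print(shlex.join(cmd))
```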

Finally, send us the following details:

- Project ID

- Dataset ID

- Data location (for example, US or EU)

- Table names (all the tables you would like to share with us)
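If you manage several projects or datasets, it can help to gather these details in one place before sending them. The sketch below uses a hypothetical `AampeBigQueryDetails` dataclass (not part of any Aampe or Google library) and writes table names in BigQuery's `project.dataset.table` form; every value in the usage example is a placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class AampeBigQueryDetails:
    """Hypothetical container for the details Aampe asks for."""
    project_id: str
    dataset_id: str
    location: str  # the dataset's location, e.g. "US" or "EU"
    tables: list[str] = field(default_factory=list)

    def fully_qualified_tables(self) -> list[str]:
        # BigQuery's GoogleSQL form: project.dataset.table
        return [f"{self.project_id}.{self.dataset_id}.{t}" for t in self.tables]

    def to_message(self) -> str:
        """Render the details as plain text, ready to paste into an email."""
        lines = [
            f"Project ID: {self.project_id}",
            f"Dataset ID: {self.dataset_id}",
            f"Data location: {self.location}",
            "Tables:",
        ]
        lines += [f"- {name}" for name in self.fully_qualified_tables()]
        return "\n".join(lines)


if __name__ == "__main__":
    details = AampeBigQueryDetails(
        project_id="my-project",  # placeholder values throughout
        dataset_id="my_dataset",
        location="US",
        tables=["events", "users"],
    )
    print(details.to_message())
```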

2. Push to a Google Cloud Storage bucket

When the data volume is very large, we recommend pushing the data to a Google Cloud Storage (GCS) bucket instead.

We then copy the data from your GCS bucket to a bucket of our own for ingestion into our system.

If you prefer, you can push directly to a bucket we create for you.

Steps

- Create a bucket on Google Cloud Storage (or Amazon S3).

- Give Aampe access to that bucket (we will send you the details of the account to grant access to).

- Set up an export from BigQuery to the storage bucket (see Export Data).

- Automate the export so that each day of event data is exported.
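The bucket-access and export steps above can be sketched as follows. This assumes the Firebase export layout (one daily table named events_YYYYMMDD), a hypothetical bucket name and object prefix, and that read-only `roles/storage.objectViewer` is enough for Aampe to copy the files; Aampe will confirm the actual account and access it needs. The functions only build a `gcloud storage buckets add-iam-policy-binding` command and a BigQuery `EXPORT DATA` statement; they run nothing.

```python
import datetime as dt
import shlex


def bucket_grant_command(bucket: str, service_account: str) -> list[str]:
    """Build (but do not run) a command giving an account read access to a
    GCS bucket. roles/storage.objectViewer is an assumption: confirm the
    role with Aampe along with the account details."""
    return [
        "gcloud", "storage", "buckets", "add-iam-policy-binding",
        f"gs://{bucket}",
        f"--member=serviceAccount:{service_account}",
        "--role=roles/storage.objectViewer",
    ]


def daily_export_sql(project_id: str, dataset_id: str, bucket: str,
                     day: dt.date) -> str:
    """Build an EXPORT DATA statement for one day of Firebase events.

    Assumes Firebase's daily tables (events_YYYYMMDD); the object prefix
    "events/dt=YYYY-MM-DD/" is a hypothetical layout.
    """
    # EXPORT DATA expects a single '*' wildcard in the URI, which BigQuery
    # replaces with a generated number for each file it writes.
    uri = f"gs://{bucket}/events/dt={day.isoformat()}/part-*.parquet"
    table = f"`{project_id}.{dataset_id}.events_{day.strftime('%Y%m%d')}`"
    return (
        "EXPORT DATA OPTIONS (\n"
        f"  uri = '{uri}',\n"
        "  format = 'PARQUET',\n"
        "  overwrite = true\n"
        ") AS\n"
        f"SELECT * FROM {table};"
    )


def previous_day(today: dt.date) -> dt.date:
    # A daily job run "today" exports the previous day's table.
    return today - dt.timedelta(days=1)


if __name__ == "__main__":
    # All names below are placeholders.
    print(shlex.join(bucket_grant_command(
        "my-export-bucket", "aampe-sa@example.iam.gserviceaccount.com")))
    print(daily_export_sql("my-project", "my_dataset", "my-export-bucket",
                           previous_day(dt.date.today())))
```

PARQUET is used here because Firebase event tables contain nested and repeated fields, which CSV export does not support. The generated statement can be run in the BigQuery console or with `bq query --use_legacy_sql=false`; running it once a day is left to whichever scheduler you already use.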

For details on the data model and FAQs, see Data Models and Event stream.
